import { T as ToneAudioNode, o as optionsFromArguments, P as Param, r as readOnly, g as getContext, _ as __awaiter, O as OfflineContext, s as setContext, c as ToneAudioBuffer, d as Source, e as assert, b as ToneBufferSource, V as Volume, f as isNumber, h as isDefined, j as connect, k as Signal, G as Gain, l as connectSeries, m as SignalOperator, n as Multiply, p as disconnect, q as Oscillator, A as AudioToGain, t as connectSignal, a as ToneAudioBuffers, u as noOp, v as Player, C as Clock, w as intervalToFrequencyRatio, x as assertRange, y as defaultArg, W as WaveShaper, z as ToneConstantSource, B as TransportTimeClass, D as Monophonic, E as Synth, H as omitFromObject, I as OmniOscillator, J as Envelope, K as writable, L as AmplitudeEnvelope, N as deepMerge, Q as FMOscillator, R as Instrument, U as getWorkletGlobalScope, X as workletName, Y as warn, Z as MidiClass, $ as isArray, a0 as ToneWithContext, a1 as StateTimeline, a2 as TicksClass, a3 as isBoolean, a4 as isObject, a5 as isUndef, a6 as clamp, i as isString, a7 as Panner, a8 as gainToDb, a9 as dbToGain, aa as workletName$1, ab as ToneOscillatorNode, ac as theWindow } from './common/index-b6fc655f.js';

export { aL as AMOscillator, L as AmplitudeEnvelope, A as AudioToGain, aB as BaseContext, as as Buffer, au as BufferSource, at as Buffers, aP as Channel, C as Clock, aA as Context, ak as Destination, ao as Draw, aH as Emitter, J as Envelope, Q as FMOscillator, aN as FatOscillator, F as Frequency, aC as FrequencyClass, G as Gain, aI as IntervalTimeline, am as Listener, al as Master, ae as MembraneSynth, M as Midi, Z as MidiClass, n as Multiply, O as OfflineContext, I as OmniOscillator, q as Oscillator, aO as PWMOscillator, aQ as PanVol, a7 as Panner, P as Param, v as Player, aM as PulseOscillator, S as Sampler, k as Signal, aR as Solo, a1 as StateTimeline, E as Synth, aF as Ticks, a2 as TicksClass, aE as Time, aD as TimeClass, aJ as Timeline, c as ToneAudioBuffer, a as ToneAudioBuffers, T as ToneAudioNode, b as ToneBufferSource, ab as ToneOscillatorNode, ai as Transport, aG as TransportTime, B as TransportTimeClass, V as Volume, W as WaveShaper, j as connect, l as connectSeries, t as connectSignal, aq as context, a9 as dbToGain, ax as debug, y as defaultArg, p as disconnect, ay as ftom, a8 as gainToDb, g as getContext, af as getDestination, ap as getDraw, an as getListener, aj as getTransport, ah as immediate, w as intervalToFrequencyRatio, $ as isArray, a3 as isBoolean, h as isDefined, aK as isFunction, ad as isNote, f as isNumber, a4 as isObject, i as isString, a5 as isUndef, ar as loaded, az as mtof, ag as now, o as optionsFromArguments, s as setContext, av as start, aw as supported, aS as version } from './common/index-b6fc655f.js';

/**

* Wrapper around Web Audio's native [DelayNode](http://webaudio.github.io/web-audio-api/#the-delaynode-interface).

* @category Core

* @example

* return Tone.Offline(() => {

* const delay = new Tone.Delay(0.1).toDestination();

* // connect the signal to both the delay and the destination

* const pulse = new Tone.PulseOscillator().connect(delay).toDestination();

* // start and stop the pulse

* pulse.start(0).stop(0.01);

* }, 0.5, 1);

*/

class Delay extends ToneAudioNode {

constructor() {

super(optionsFromArguments(Delay.getDefaults(), arguments, ["delayTime", "maxDelay"]));

this.name = "Delay";

const options = optionsFromArguments(Delay.getDefaults(), arguments, ["delayTime", "maxDelay"]);

const maxDelayInSeconds = this.toSeconds(options.maxDelay);

this._maxDelay = Math.max(maxDelayInSeconds, this.toSeconds(options.delayTime));

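// size the native node by the larger of maxDelay and the initial delayTime so it matches this._maxDelay

this._delayNode = this.input = this.output = this.context.createDelay(this._maxDelay);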

this.delayTime = new Param({

context: this.context,

param: this._delayNode.delayTime,

units: "time",

value: options.delayTime,

minValue: 0,

maxValue: this.maxDelay,

});

readOnly(this, "delayTime");

}

static getDefaults() {

return Object.assign(ToneAudioNode.getDefaults(), {

delayTime: 0,

maxDelay: 1,

});

}

/**

* The maximum delay time. This cannot be changed after

* the value is passed into the constructor.

*/

get maxDelay() {

return this._maxDelay;

}

/**

* Clean up.

*/

dispose() {

super.dispose();

this._delayNode.disconnect();

this.delayTime.dispose();

return this;

}

}
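// Illustrative sketch (hypothetical helper, not part of the Tone.js API):
// what a Delay does to a block of samples, assuming a whole-sample delay.
// A native DelayNode can also produce fractional (interpolated) delays and
// clamps delayTime to the maxDelayTime it was created with. Neither behavior
// is modeled here.
function sketchDelayLine(input, delayTime, sampleRate) {
	// convert the delay from seconds to a whole number of samples
	const delaySamples = Math.round(Math.max(delayTime, 0) * sampleRate);
	const output = new Float32Array(input.length);
	// samples before delaySamples stay at 0 (silence before the echo arrives)
	for (let i = delaySamples; i < input.length; i++) {
		output[i] = input[i - delaySamples];
	}
	return output;
}
// usage: sketchDelayLine([1, 0, 0, 0, 0], 2, 1) holds the values [0, 0, 1, 0, 0]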

/**

* Generate a buffer by rendering all of the Tone.js code within the callback using the OfflineAudioContext.

* The OfflineAudioContext is capable of rendering much faster than real time in many cases.

* The callback is passed an offline instance of [[Context]], which can be used

* to schedule events along the Transport.

* @param callback All Tone.js nodes which are created and scheduled within this callback are recorded into the output Buffer.

* @param duration The amount of time to record for.

* @param channels The number of output channels (default 2).

* @param sampleRate The sample rate of the render (defaults to the current context's sample rate).

* @return A promise which resolves with the ToneAudioBuffer of the recorded output.

* @example

* // render 2 seconds of the oscillator

* Tone.Offline(() => {

* // only nodes created in this callback will be recorded

* const oscillator = new Tone.Oscillator().toDestination().start(0);

* }, 2).then((buffer) => {

* // do something with the output buffer

* console.log(buffer);

* });

* @example

* // can also schedule events along the Transport

* // using the passed in Offline Transport

* Tone.Offline(({ transport }) => {

* const osc = new Tone.Oscillator().toDestination();

* transport.schedule(time => {

* osc.start(time).stop(time + 0.1);

* }, 1);

* // make sure to start the transport

* transport.start(0.2);

* }, 4).then((buffer) => {

* // do something with the output buffer

* console.log(buffer);

* });

* @category Core

*/

function Offline(callback, duration, channels = 2, sampleRate = getContext().sampleRate) {

return __awaiter(this, void 0, void 0, function* () {

// set the OfflineAudioContext based on the current context

const originalContext = getContext();

const context = new OfflineContext(channels, duration, sampleRate);

setContext(context);

let bufferPromise;

try {

// invoke the callback/scheduling

yield callback(context);

// then render the audio

bufferPromise = context.render();

}

finally {

// restore the original context, even if the callback throws

setContext(originalContext);

}

// await the rendering

const buffer = yield bufferPromise;

// return the audio

return new ToneAudioBuffer(buffer);

});

}
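// Illustrative sketch (hypothetical helper, not part of the Tone.js API):
// the "swap the global context, run the callback, always restore" pattern
// that Offline relies on. getCurrent/setCurrent are assumed stand-ins for
// getContext/setContext, and `temporary` stands in for the OfflineContext.
async function sketchWithTemporaryContext(getCurrent, setCurrent, temporary, callback) {
	const original = getCurrent();
	setCurrent(temporary);
	try {
		// the callback may be sync or async; awaiting handles both
		return await callback(temporary);
	} finally {
		// restore the original context whether the callback resolved or threw
		setCurrent(original);
	}
}
// usage: sketchWithTemporaryContext(() => ctx, (c) => { ctx = c; }, offline, (c) => schedule(c))
// where ctx, offline and schedule are placeholders for the caller's own values.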

var Units = /*#__PURE__*/Object.freeze({

__proto__: null

});

/**

* Noise is a noise generator. It loops precomputed noise buffers instead of generating noise in real time, which saves CPU.

* Noise supports the noise types: "pink", "white", and "brown". Read more about

* colors of noise on [Wikipedia](https://en.wikipedia.org/wiki/Colors_of_noise).

*

* @example

* // initialize the noise and start

* const noise = new Tone.Noise("pink").start();

* // make an autofilter to shape the noise

* const autoFilter = new Tone.AutoFilter({

* frequency: "8n",

* baseFrequency: 200,

* octaves: 8

* }).toDestination();

* // connect the noise

* noise.connect(autoFilter);

* // start the autofilter LFO

* autoFilter.start();

* @category Source

*/

class Noise extends Source {

constructor() {

super(optionsFromArguments(Noise.getDefaults(), arguments, ["type"]));

this.name = "Noise";

/**

* Private reference to the source

*/

this._source = null;

const options = optionsFromArguments(Noise.getDefaults(), arguments, ["type"]);

this._playbackRate = options.playbackRate;

this.type = options.type;

this._fadeIn = options.fadeIn;

this._fadeOut = options.fadeOut;

}

static getDefaults() {

return Object.assign(Source.getDefaults(), {

fadeIn: 0,

fadeOut: 0,

playbackRate: 1,

type: "white",

});

}

/**

* The type of the noise. Can be "white", "brown", or "pink".

* @example

* const noise = new Tone.Noise().toDestination().start();

* noise.type = "brown";

*/

get type() {

return this._type;

}

set type(type) {

assert(type in _noiseBuffers, "Noise: invalid type: " + type);

if (this._type !== type) {

this._type = type;

// if it's playing, stop and restart it

if (this.state === "started") {

const now = this.now();

this._stop(now);

this._start(now);

}

}

}

/**

* The playback rate of the noise. Affects

* the "frequency" of the noise.

*/

get playbackRate() {

return this._playbackRate;

}

set playbackRate(rate) {

this._playbackRate = rate;

if (this._source) {

this._source.playbackRate.value = rate;

}

}

/**

* internal start method

*/

_start(time) {

const buffer = _noiseBuffers[this._type];

this._source = new ToneBufferSource({

url: buffer,

context: this.context,

fadeIn: this._fadeIn,

fadeOut: this._fadeOut,

loop: true,

onended: () => this.onstop(this),

playbackRate: this._playbackRate,

}).connect(this.output);

this._source.start(this.toSeconds(time), Math.random() * (buffer.duration - 0.001));

}

/**

* internal stop method

*/

_stop(time) {

if (this._source) {

this._source.stop(this.toSeconds(time));

this._source = null;

}

}

/**

* The fadeIn time of the amplitude envelope.

*/

get fadeIn() {

return this._fadeIn;

}

set fadeIn(time) {

this._fadeIn = time;

if (this._source) {

this._source.fadeIn = this._fadeIn;

}

}

/**

* The fadeOut time of the amplitude envelope.

*/

get fadeOut() {

return this._fadeOut;

}

set fadeOut(time) {

this._fadeOut = time;

if (this._source) {

this._source.fadeOut = this._fadeOut;

}

}

_restart(time) {

// TODO could be optimized by cancelling the buffer source 'stop'

this._stop(time);

this._start(time);

}

/**

* Clean up.

*/

dispose() {

super.dispose();

if (this._source) {

this._source.disconnect();

}

return this;

}

}
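// Illustrative sketch (hypothetical helper, not part of the Tone.js API):
// Noise._start begins each looped playback at a random offset into the
// cached buffer, so repeated starts do not replay the same samples. The
// 0.001 s margin mirrors the value used in _start above; `random` is an
// assumed injectable source so the result can be made deterministic.
function sketchRandomLoopOffset(bufferDuration, random = Math.random) {
	return random() * (bufferDuration - 0.001);
}
// usage: sketchRandomLoopOffset(5) returns an offset in seconds in [0, 4.999)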

//--------------------

// THE NOISE BUFFERS

//--------------------

// Noise buffer parameters: 5 seconds of audio at 44.1 kHz, in stereo

const BUFFER_LENGTH = 44100 * 5;

const NUM_CHANNELS = 2;

/**

* Cache the noise buffers

*/

const _noiseCache = {

brown: null,

pink: null,

white: null,

};

/**

* The noise arrays. Generated on initialization.

* borrowed heavily from https://github.com/zacharydenton/noise.js

* (c) 2013 Zach Denton (MIT)

*/

const _noiseBuffers = {

get brown() {

if (!_noiseCache.brown) {

const buffer = [];

for (let channelNum = 0; channelNum < NUM_CHANNELS; channelNum++) {

const channel = new Float32Array(BUFFER_LENGTH);

buffer[channelNum] = channel;

let lastOut = 0.0;

for (let i = 0; i < BUFFER_LENGTH; i++) {

const white = Math.random() * 2 - 1;

channel[i] = (lastOut + (0.02 * white)) / 1.02;

lastOut = channel[i];

channel[i] *= 3.5; // (roughly) compensate for gain

}

}

_noiseCache.brown = new ToneAudioBuffer().fromArray(buffer);

}

return _noiseCache.brown;

},

get pink() {

if (!_noiseCache.pink) {

const buffer = [];

for (let channelNum = 0; channelNum < NUM_CHANNELS; channelNum++) {

const channel = new Float32Array(BUFFER_LENGTH);

buffer[channelNum] = channel;

let b0, b1, b2, b3, b4, b5, b6;

b0 = b1 = b2 = b3 = b4 = b5 = b6 = 0.0;

for (let i = 0; i < BUFFER_LENGTH; i++) {

const white = Math.random() * 2 - 1;

b0 = 0.99886 * b0 + white * 0.0555179;

b1 = 0.99332 * b1 + white * 0.0750759;

b2 = 0.96900 * b2 + white * 0.1538520;

b3 = 0.86650 * b3 + white * 0.3104856;

b4 = 0.55000 * b4 + white * 0.5329522;

b5 = -0.7616 * b5 - white * 0.0168980;

channel[i] = b0 + b1 + b2 + b3 + b4 + b5 + b6 + white * 0.5362;

channel[i] *= 0.11; // (roughly) compensate for gain

b6 = white * 0.115926;

}

}

_noiseCache.pink = new ToneAudioBuffer().fromArray(buffer);

}

return _noiseCache.pink;

},

get white() {

if (!_noiseCache.white) {

const buffer = [];

for (let channelNum = 0; channelNum < NUM_CHANNELS; channelNum++) {

const channel = new Float32Array(BUFFER_LENGTH);

buffer[channelNum] = channel;

for (let i = 0; i < BUFFER_LENGTH; i++) {

channel[i] = Math.random() * 2 - 1;

}

}

_noiseCache.white = new ToneAudioBuffer().fromArray(buffer);

}

return _noiseCache.white;

},

};
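// Illustrative sketch (hypothetical helpers, not exported by Tone.js): the
// per-channel generators used by _noiseBuffers above, rewritten with an
// injectable `random` source so the output can be made deterministic.
// Brown noise is a leaky integrator (a one-pole low-pass) on white noise.
// The pink filter coefficients are the ones in the getter above, which match
// what is commonly cited as Paul Kellet's refined pink-noise method.
function sketchBrownNoise(length, random = Math.random) {
	const out = new Float32Array(length);
	let lastOut = 0;
	for (let i = 0; i < length; i++) {
		const white = random() * 2 - 1;
		// leaky integration keeps |lastOut| <= 1 for white in [-1, 1)
		lastOut = (lastOut + 0.02 * white) / 1.02;
		out[i] = lastOut * 3.5; // same rough gain compensation as above
	}
	return out;
}

function sketchPinkNoise(length, random = Math.random) {
	const out = new Float32Array(length);
	let b0 = 0, b1 = 0, b2 = 0, b3 = 0, b4 = 0, b5 = 0, b6 = 0;
	for (let i = 0; i < length; i++) {
		const white = random() * 2 - 1;
		// parallel one-pole filters whose sum approximates a -3 dB/octave slope
		b0 = 0.99886 * b0 + white * 0.0555179;
		b1 = 0.99332 * b1 + white * 0.0750759;
		b2 = 0.96900 * b2 + white * 0.1538520;
		b3 = 0.86650 * b3 + white * 0.3104856;
		b4 = 0.55000 * b4 + white * 0.5329522;
		b5 = -0.7616 * b5 - white * 0.0168980;
		out[i] = (b0 + b1 + b2 + b3 + b4 + b5 + b6 + white * 0.5362) * 0.11;
		b6 = white * 0.115926;
	}
	return out;
}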

/**

* UserMedia uses MediaDevices.getUserMedia to open an external microphone or audio input.

* Check [MediaDevices API Support](https://developer.mozilla.org/en-US/docs/Web/API/MediaDevices/getUserMedia)

* to see which browsers are supported. Access to an external input

* is limited to secure (HTTPS) connections.

* @example

* const meter = new Tone.Meter();

* const mic = new Tone.UserMedia().connect(meter);

* mic.open().then(() => {

* // promise resolves when input is available

* console.log("mic open");

* // print the incoming mic levels in decibels

* setInterval(() => console.log(meter.getValue()), 100);

* }).catch(e => {

* // promise is rejected when the user doesn't have or allow mic access

* console.log("mic not open");

* });

* @category Source

*/

class UserMedia extends ToneAudioNode {

constructor() {

super(optionsFromArguments(UserMedia.getDefaults(), arguments, ["volume"]));

this.name = "UserMedia";

const options = optionsFromArguments(UserMedia.getDefaults(), arguments, ["volume"]);

this._volume = this.output = new Volume({

context: this.context,

volume: options.volume,

});

this.volume = this._volume.volume;

readOnly(this, "volume");

this.mute = options.mute;

}

static getDefaults() {

return Object.assign(ToneAudioNode.getDefaults(), {

mute: false,

volume: 0

});

}

/**

* Open the media stream. If a string is passed in, it is assumed

* to be the label or ID of the device; if a number is passed in,

* it is used as the index into the list of audio input devices.

* @param labelOrId The label or id of the audio input media device.

* With no argument, the default stream is opened.

* @return The promise is resolved when the stream is open.

*/

open(labelOrId) {

return __awaiter(this, void 0, void 0, function* () {

assert(UserMedia.supported, "UserMedia is not supported");

// close the previous stream

if (this.state === "started") {

this.close();

}

const devices = yield UserMedia.enumerateDevices();

if (isNumber(labelOrId)) {

this._device = devices[labelOrId];

}

else {

this._device = devices.find((device) => {

return device.label === labelOrId || device.deviceId === labelOrId;

});

// didn't find a matching device

if (!this._device && devices.length > 0) {

this._device = devices[0];

}

assert(isDefined(this._device), `No matching device ${labelOrId}`);

}

// do getUserMedia

const constraints = {

audio: {

echoCancellation: false,

sampleRate: this.context.sampleRate,

noiseSuppression: false,

mozNoiseSuppression: false,

}

};

if (this._device) {

// @ts-ignore

constraints.audio.deviceId = this._device.deviceId;

}

const stream = yield navigator.mediaDevices.getUserMedia(constraints);

// start a new source only if the previous one is closed

if (!this._stream) {

this._stream = stream;

// Wrap a MediaStreamSourceNode around the live input stream.

const mediaStreamNode = this.context.createMediaStreamSource(stream);

// Connect the MediaStreamSourceNode to the output Volume node

connect(mediaStreamNode, this.output);

this._mediaStream = mediaStreamNode;

}

return this;

});

}

/**

* Close the media stream

*/

close() {

if (this._stream && this._mediaStream) {

this._stream.getAudioTracks().forEach((track) => {

track.stop();

});

this._stream = undefined;

// remove the old media stream

this._mediaStream.disconnect();

this._mediaStream = undefined;

}

this._device = undefined;

return this;

}

/**

* Returns a promise which resolves with the list of audio input devices available.

* @return The promise that is resolved with the devices

* @example

* Tone.UserMedia.enumerateDevices().then((devices) => {

* // print the device labels

* console.log(devices.map(device => device.label));

* });

*/

static enumerateDevices() {

return __awaiter(this, void 0, void 0, function* () {

const allDevices = yield navigator.mediaDevices.enumerateDevices();

return allDevices.filter(device => {

return device.kind === "audioinput";

});

});

}

/**

* Returns the playback state of the source, "started" when the microphone is open

* and "stopped" when the mic is closed.

*/

get state() {

return this._stream && this._stream.active ? "started" : "stopped";

}

/**

* Returns an identifier for the represented device that is

* persisted across sessions. It is unguessable by other applications and

* unique to the origin of the calling application. It is reset when the

* user clears cookies (for Private Browsing, a different identifier is

* used that is not persisted across sessions). Returns undefined when the

* device is not open.

*/

get deviceId() {

if (this._device) {

return this._device.deviceId;

}

else {

return undefined;

}

}

/**

* Returns a group identifier. Two devices have the

* same group identifier if they belong to the same physical device.

* Returns undefined when the device is not open.

*/

get groupId() {

if (this._device) {

return this._device.groupId;

}

else {

return undefined;

}

}

/**

* Returns a label describing this device (for example "Built-in Microphone").

* Returns undefined when the device is not open or label is not available

* because of permissions.

*/

get label() {

if (this._device) {

return this._device.label;

}

else {

return undefined;

}

}

/**

* Mute the output.

* @example

* const mic = new Tone.UserMedia();

* mic.open().then(() => {

* // promise resolves when input is available

* });

* // mute the output

* mic.mute = true;

*/

get mute() {

return this._volume.mute;

}

set mute(mute) {

this._volume.mute = mute;

}

dispose() {

super.dispose();

this.close();

this._volume.dispose();

this.volume.dispose();

return this;

}

/**

* True if getUserMedia is supported by the browser.

*/

static get supported() {

return isDefined(navigator.mediaDevices) &&

isDefined(navigator.mediaDevices.getUserMedia);

}

}
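// Illustrative sketch (hypothetical helper, not part of the Tone.js API):
// the device lookup performed inside UserMedia.open. A number selects a
// device by index; anything else is matched against `label` or `deviceId`,
// falling back to the first device when nothing matches. `devices` is
// assumed to be the already-filtered "audioinput" list.
function sketchSelectInputDevice(devices, labelOrId) {
	if (typeof labelOrId === "number") {
		// out-of-range indices yield undefined, just like devices[labelOrId]
		return devices[labelOrId];
	}
	const match = devices.find((device) => device.label === labelOrId || device.deviceId === labelOrId);
	// devices[0] is undefined when the list is empty
	return match !== undefined ? match : devices[0];
}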

/**

* Add a signal and a number, or two signals. When no value is

* passed into the constructor, Tone.Add sums the input and `addend`.

* If a value is passed into the constructor, it is added to the input.

*

* @example

* return Tone.Offline(() => {

* const add = new Tone.Add(2).toDestination();

* add.addend.setValueAtTime(1, 0.2);

* const signal = new Tone.Signal(2);

* // add a signal and a scalar

* signal.connect(add);

* signal.setValueAtTime(1, 0.1);

* }, 0.5, 1);

* @category Signal

*/

class Add extends Signal {

constructor() {

super(Object.assign(optionsFromArguments(Add.getDefaults(), arguments, ["value"])));

this.override = false;

this.name = "Add";

/**

* the summing node

*/

this._sum = new Gain({ context: this.context });

this.input = this._sum;

this.output = this._sum;

/**

* The value which is added to the input signal

*/

this.addend = this._param;

connectSeries(this._constantSource, this._sum);

}

static getDefaults() {

return Object.assign(Signal.getDefaults(), {

value: 0,

});

}

dispose() {

super.dispose();

this._sum.dispose();

return this;

}

}
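// Illustrative sketch (hypothetical helper, block-based, not the Tone.js API):
// Add outputs input + addend for every sample. The addend may be a constant
// or a per-sample array, which is how an automated addend (for example one
// changed with setValueAtTime) would look once rendered.
function sketchAddSignals(input, addend) {
	const out = new Float32Array(input.length);
	for (let i = 0; i < input.length; i++) {
		out[i] = input[i] + (typeof addend === "number" ? addend : addend[i]);
	}
	return out;
}
// usage: sketchAddSignals([2, 2, 1], 2) holds the values [4, 4, 3]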

/**

* Performs a linear scaling on an input signal.

* Scales a NormalRange input (0 to 1) to the range

* between `min` and `max`.

*

* @example

* const scale = new Tone.Scale(50, 100);

* const signal = new Tone.Signal(0.5).connect(scale);

* // the output of scale equals 75

* @category Signal

*/

class Scale extends SignalOperator {

constructor() {

super(Object.assign(optionsFromArguments(Scale.getDefaults(), arguments, ["min", "max"])));

this.name = "Scale";

const options = optionsFromArguments(Scale.getDefaults(), arguments, ["min", "max"]);

this._mult = this.input = new Multiply({

context: this.context,

value: options.max - options.min,

});

this._add = this.output = new Add({

context: this.context,

value: options.min,

});

this._min = options.min;

this._max = options.max;

this.input.connect(this.output);

}

static getDefaults() {

return Object.assign(SignalOperator.getDefaults(), {

max: 1,

min: 0,

});

}

/**

* The minimum output value. This number is output when the input value is 0.

*/

get min() {

return this._min;

}

set min(min) {

this._min = min;

this._setRange();

}

/**

* The maximum output value. This number is output when the input value is 1.

*/

get max() {

return this._max;

}

set max(max) {

this._max = max;

this._setRange();

}

/**

* set the values

*/

_setRange() {

this._add.value = this._min;

this._mult.value = this._max - this._min;

}

dispose() {

super.dispose();

this._add.dispose();

this._mult.dispose();

return this;

}

}
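// Illustrative sketch (hypothetical helper, not part of the Tone.js API): the
// per-sample arithmetic Scale performs. The Multiply node scales the [0, 1]
// input by (max - min), and the Add node then offsets the result by min.
function sketchScaleNormalRange(x, min, max) {
	return x * (max - min) + min;
}
// usage: sketchScaleNormalRange(0.5, 50, 100) returns 75, matching the example above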

/**

* Tone.Zero outputs 0's at audio-rate. The reason this has to be

* its own class is that many browsers optimize out Tone.Signal

* with a value of 0 and will not process nodes further down the graph.

* @category Signal

*/

class Zero extends SignalOperator {

constructor() {

super(Object.assign(optionsFromArguments(Zero.getDefaults(), arguments)));

this.name = "Zero";

/**

* The gain node which connects the constant source to the output

*/

this._gain = new Gain({ context: this.context });

/**

* Only outputs 0

*/

this.output = this._gain;

/**

* no input node

*/

this.input = undefined;

connect(this.context.getConstant(0), this._gain);

}

/**

* clean up

*/

dispose() {

super.dispose();

disconnect(this.context.getConstant(0), this._gain);

return this;

}

}

/**

* LFO stands for low frequency oscillator. LFO produces an output signal

* which can be attached to an AudioParam or Tone.Signal

* in order to modulate that parameter with an oscillator. The LFO can

* also be synced to the Transport so that it starts and stops with it and changes rate when the tempo changes.

* @example

* return Tone.Offline(() => {

* const lfo = new Tone.LFO("4n", 400, 4000).start().toDestination();

* }, 0.5, 1);

* @category Source

*/

class LFO extends ToneAudioNode {

constructor() {

super(optionsFromArguments(LFO.getDefaults(), arguments, ["frequency", "min", "max"]));

this.name = "LFO";

/**

* The value that the LFO outputs when it's stopped

*/

this._stoppedValue = 0;

/**

* A private placeholder for the units

*/

this._units = "number";

/**

* If the input value is converted using the [[units]]

*/

this.convert = true;

/**

* Private methods borrowed from Param

*/

// @ts-ignore

this._fromType = Param.prototype._fromType;

// @ts-ignore

this._toType = Param.prototype._toType;

// @ts-ignore

this._is = Param.prototype._is;

// @ts-ignore

this._clampValue = Param.prototype._clampValue;

const options = optionsFromArguments(LFO.getDefaults(), arguments, ["frequency", "min", "max"]);

this._oscillator = new Oscillator(options);

this.frequency = this._oscillator.frequency;

this._amplitudeGain = new Gain({

context: this.context,

gain: options.amplitude,

units: "normalRange",

});

this.amplitude = this._amplitudeGain.gain;

this._stoppedSignal = new Signal({

context: this.context,

units: "audioRange",

value: 0,

});

this._zeros = new Zero({ context: this.context });

this._a2g = new AudioToGain({ context: this.context });

this._scaler = this.output = new Scale({

context: this.context,

max: options.max,

min: options.min,

});

this.units = options.units;

this.min = options.min;

this.max = options.max;

// connect it up

this._oscillator.chain(this._amplitudeGain, this._a2g, this._scaler);

this._zeros.connect(this._a2g);

this._stoppedSignal.connect(this._a2g);

readOnly(this, ["amplitude", "frequency"]);

this.phase = options.phase;

}

static getDefaults() {

return Object.assign(Oscillator.getDefaults(), {

amplitude: 1,

frequency: "4n",

max: 1,

min: 0,

type: "sine",

units: "number",

});

}

/**

* Start the LFO.

* @param time The time the LFO will start

*/

start(time) {

time = this.toSeconds(time);

this._stoppedSignal.setValueAtTime(0, time);

this._oscillator.start(time);

return this;

}

/**

* Stop the LFO.

* @param time The time the LFO will stop

*/

stop(time) {

time = this.toSeconds(time);

this._stoppedSignal.setValueAtTime(this._stoppedValue, time);

this._oscillator.stop(time);

return this;

}

/**

* Sync the start/stop/pause to the transport

* and the frequency to the bpm of the transport

* @example

* const lfo = new Tone.LFO("8n");

* lfo.sync().start(0);

* // the rate of the LFO will always be an eighth note, even as the tempo changes

*/

sync() {

this._oscillator.sync();

this._oscillator.syncFrequency();

return this;

}

/**

* unsync the LFO from transport control

*/

unsync() {

this._oscillator.unsync();

this._oscillator.unsyncFrequency();

return this;

}

/**

* After the oscillator waveform is updated, reset the `_stoppedSignal` value to match the updated waveform

*/

_setStoppedValue() {

this._stoppedValue = this._oscillator.getInitialValue();

this._stoppedSignal.value = this._stoppedValue;

}

/**

* The minimum output of the LFO.

*/

get min() {

return this._toType(this._scaler.min);

}

set min(min) {

min = this._fromType(min);

this._scaler.min = min;

}

/**

* The maximum output of the LFO.

*/

get max() {

return this._toType(this._scaler.max);

}

set max(max) {

max = this._fromType(max);

this._scaler.max = max;

}

/**

* The type of the oscillator: See [[Oscillator.type]]

*/

get type() {

return this._oscillator.type;

}

set type(type) {

this._oscillator.type = type;

this._setStoppedValue();

}

/**

* The oscillator's partials array: See [[Oscillator.partials]]

*/

get partials() {

return this._oscillator.partials;

}

set partials(partials) {

this._oscillator.partials = partials;

this._setStoppedValue();

}

/**

* The phase of the LFO.

*/

get phase() {

return this._oscillator.phase;

}

set phase(phase) {

this._oscillator.phase = phase;

this._setStoppedValue();

}

/**

* The output units of the LFO.

*/

get units() {

return this._units;

}

set units(val) {

const currentMin = this.min;

const currentMax = this.max;

// convert the min and the max

this._units = val;

this.min = currentMin;

this.max = currentMax;

}

/**

* Returns the playback state of the source, either "started" or "stopped".

*/

get state() {

return this._oscillator.state;

}

/**

* @param node the destination to connect to

* @param outputNum the optional output number

* @param inputNum the input number

*/

connect(node, outputNum, inputNum) {

if (node instanceof Param || node instanceof Signal) {

this.convert = node.convert;

this.units = node.units;

}

connectSignal(this, node, outputNum, inputNum);

return this;

}

dispose() {

super.dispose();

this._oscillator.dispose();

this._stoppedSignal.dispose();

this._zeros.dispose();

this._scaler.dispose();

this._a2g.dispose();

this._amplitudeGain.dispose();

this.amplitude.dispose();

return this;

}

}
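// Illustrative sketch (idealized model, not part of the Tone.js API): the
// value a sine LFO outputs at time t, following the chain built in the
// constructor: oscillator -> amplitude gain -> AudioToGain -> Scale.
// Assumptions: phase is given in degrees, the sine starts at 0 for phase 0,
// units conversion, Transport sync and the stopped-value path are ignored.
function sketchLfoValue(t, frequency, min, max, amplitude = 1, phaseDegrees = 0) {
	const phase = (phaseDegrees * Math.PI) / 180;
	const osc = Math.sin(2 * Math.PI * frequency * t + phase); // in [-1, 1]
	const gain = (amplitude * osc + 1) / 2; // AudioToGain maps [-1, 1] to [0, 1]
	return min + (max - min) * gain; // Scale maps [0, 1] to [min, max]
}
// With amplitude 0 the output sits at the midpoint (min + max) / 2.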

/**

* Players combines multiple [[Player]] objects.

* @category Source

*/

class Players extends ToneAudioNode {

constructor() {

super(optionsFromArguments(Players.getDefaults(), arguments, ["urls", "onload"], "urls"));

this.name = "Players";

/**

* Players has no input.

*/

this.input = undefined;

/**

* The container of all of the players

*/

this._players = new Map();

const options = optionsFromArguments(Players.getDefaults(), arguments, ["urls", "onload"], "urls");

/**

* The output volume node

*/

this._volume = this.output = new Volume({

context: this.context,

volume: options.volume,

});

this.volume = this._volume.volume;

readOnly(this, "volume");

this._buffers = new ToneAudioBuffers({

urls: options.urls,

onload: options.onload,

baseUrl: options.baseUrl,

onerror: options.onerror

});

// apply the initial mute state

this.mute = options.mute;

this._fadeIn = options.fadeIn;

this._fadeOut = options.fadeOut;

}

static getDefaults() {

return Object.assign(Source.getDefaults(), {

baseUrl: "",

fadeIn: 0,

fadeOut: 0,

mute: false,

onload: noOp,

onerror: noOp,

urls: {},

volume: 0,

});

}

/**

* Mute the output.

*/

get mute() {

return this._volume.mute;

}

set mute(mute) {

this._volume.mute = mute;

}

/**

* The fadeIn time of the envelope applied to the source.

*/

get fadeIn() {

return this._fadeIn;

}

set fadeIn(fadeIn) {

this._fadeIn = fadeIn;

this._players.forEach(player => {

player.fadeIn = fadeIn;

});

}

/**

* The fadeOut time of each of the sources.

*/

get fadeOut() {

return this._fadeOut;

}

set fadeOut(fadeOut) {

this._fadeOut = fadeOut;

this._players.forEach(player => {

player.fadeOut = fadeOut;

});

}

/**

* The state of the players object. Returns "started" if any of the players are playing.

*/

get state() {

const playing = Array.from(this._players).some(([_, player]) => player.state === "started");

return playing ? "started" : "stopped";

}

/**

* True if the buffers object has a buffer by that name.

* @param name The key or index of the buffer.

*/

has(name) {

return this._buffers.has(name);

}

/**

* Get a player by name.

* @param name The player's name as defined in the constructor object or `add` method.

*/

player(name) {

assert(this.has(name), `No Player with the name ${name} exists on this object`);

if (!this._players.has(name)) {

const player = new Player({

context: this.context,

fadeIn: this._fadeIn,

fadeOut: this._fadeOut,

url: this._buffers.get(name),

}).connect(this.output);

this._players.set(name, player);

}

return this._players.get(name);

}

/**

* True if all of the buffers are loaded.

*/

get loaded() {

return this._buffers.loaded;

}

/**

* Add a player by name and url to the Players

* @param name A unique name to give the player

* @param url Either the url of the buffer or a buffer which will be added with the given name.

* @param callback The callback to invoke when the url is loaded.

*/

add(name, url, callback) {

assert(!this._buffers.has(name), "A buffer with that name already exists on this object");

this._buffers.add(name, url, callback);

return this;

}

/**

* Stop all of the players at the given time

* @param time The time to stop all of the players.

*/

stopAll(time) {

this._players.forEach(player => player.stop(time));

return this;

}

dispose() {

super.dispose();

this._volume.dispose();

this.volume.dispose();

this._players.forEach(player => player.dispose());

this._buffers.dispose();

return this;

}

}
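// Illustrative sketch (hypothetical helper, not part of the Tone.js API):
// Players.player(name) creates each Player lazily on first request and caches
// it in a Map, so later calls return the same instance. `factory` is an
// assumed stand-in for the `new Player({...}).connect(this.output)` call.
function sketchGetOrCreate(map, key, factory) {
	if (!map.has(key)) {
		map.set(key, factory(key));
	}
	return map.get(key);
}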

/**

* GrainPlayer implements [granular synthesis](https://en.wikipedia.org/wiki/Granular_synthesis).

* Granular synthesis enables you to adjust pitch and playback rate independently. The grainSize is the

* duration of each small chunk (grain) of audio, and the overlap is the

* crossfade time between successive grains.

* @category Source

*/

class GrainPlayer extends Source {

constructor() {

super(optionsFromArguments(GrainPlayer.getDefaults(), arguments, ["url", "onload"]));

this.name = "GrainPlayer";

/**

* Internal loopStart value

*/

this._loopStart = 0;

/**

* Internal loopEnd value

*/

this._loopEnd = 0;

/**

* All of the currently playing BufferSources

*/

this._activeSources = [];

const options = optionsFromArguments(GrainPlayer.getDefaults(), arguments, ["url", "onload"]);

this.buffer = new ToneAudioBuffer({

onload: options.onload,

onerror: options.onerror,

reverse: options.reverse,

url: options.url,

});

this._clock = new Clock({

context: this.context,

callback: this._tick.bind(this),

frequency: 1 / options.grainSize

});

this._playbackRate = options.playbackRate;

this._grainSize = options.grainSize;

this._overlap = options.overlap;

this.detune = options.detune;

// setup

this.overlap = options.overlap;

this.loop = options.loop;

this.playbackRate = options.playbackRate;

this.grainSize = options.grainSize;

this.loopStart = options.loopStart;

this.loopEnd = options.loopEnd;

this.reverse = options.reverse;

this._clock.on("stop", this._onstop.bind(this));

}

static getDefaults() {

return Object.assign(Source.getDefaults(), {

onload: noOp,

onerror: noOp,

overlap: 0.1,

grainSize: 0.2,

playbackRate: 1,

detune: 0,

loop: false,

loopStart: 0,

loopEnd: 0,

reverse: false

});

}

/**

* Internal start method

*/

_start(time, offset, duration) {

offset = defaultArg(offset, 0);

offset = this.toSeconds(offset);

time = this.toSeconds(time);

const grainSize = 1 / this._clock.frequency.getValueAtTime(time);

this._clock.start(time, offset / grainSize);

if (duration) {

this.stop(time + this.toSeconds(duration));

}

}

/**

* Stop and then restart the player from the beginning (or offset)

* @param time When the player should start.

* @param offset The offset from the beginning of the sample to start at.

* @param duration How long the sample should play. If no duration is given,

* it will default to the full length of the sample (minus any offset)

*/

restart(time, offset, duration) {

super.restart(time, offset, duration);

return this;

}

_restart(time, offset, duration) {

this._stop(time);

this._start(time, offset, duration);

}

/**

* Internal stop method

*/

_stop(time) {

this._clock.stop(time);

}

/**

* Invoked when the clock is stopped

*/

_onstop(time) {

// stop the players

this._activeSources.forEach((source) => {

source.fadeOut = 0;

source.stop(time);

});

this.onstop(this);

}

/**

* Invoked on each clock tick. Schedules a new grain at this time.

*/

_tick(time) {

// check if it should stop looping

const ticks = this._clock.getTicksAtTime(time);

const offset = ticks * this._grainSize;

this.log("offset", offset);

if (!this.loop && offset > this.buffer.duration) {

this.stop(time);

return;

}

// at the beginning of the file, the fade in should be 0

const fadeIn = offset < this._overlap ? 0 : this._overlap;

// create a buffer source

const source = new ToneBufferSource({

context: this.context,

url: this.buffer,

fadeIn: fadeIn,

fadeOut: this._overlap,

loop: this.loop,

loopStart: this._loopStart,

loopEnd: this._loopEnd,

// compute the playbackRate based on the detune

playbackRate: intervalToFrequencyRatio(this.detune / 100)

}).connect(this.output);

source.start(time, this._grainSize * ticks);

source.stop(time + this._grainSize / this.playbackRate);

// add it to the active sources

this._activeSources.push(source);

// remove it when it's done

source.onended = () => {

const index = this._activeSources.indexOf(source);

if (index !== -1) {

this._activeSources.splice(index, 1);

}

};

}
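/*
A minimal sketch of the arithmetic behind _tick and the grainSize/playbackRate setters, written as a plain function so it runs without an AudioContext. `planGrain` is a hypothetical helper, not part of Tone.js, and it assumes the clock started at tick 0 at time 0 with a constant frequency. It shows how the two controls are decoupled: playbackRate only changes how often the clock launches grains (clock frequency = playbackRate / grainSize), while each grain's own pitch comes from detune through 2^(cents / 100 / 12), the ratio intervalToFrequencyRatio is assumed to compute.

```javascript
// Hypothetical helper mirroring GrainPlayer._tick; no Web Audio calls.
function planGrain(tick, { grainSize, playbackRate, overlap, detune }) {
  // The Clock fires playbackRate / grainSize times per second.
  const clockFrequency = playbackRate / grainSize;
  // Each tick reads grainSize seconds further into the buffer.
  const offset = tick * grainSize;
  return {
    startTime: tick / clockFrequency, // seconds from the clock start
    offset, // where in the buffer the grain starts reading
    duration: grainSize / playbackRate, // stop time relative to startTime
    fadeIn: offset < overlap ? 0 : overlap, // no fade-in at the head of the file
    fadeOut: overlap,
    // detune in cents -> semitones -> frequency ratio
    rate: Math.pow(2, detune / 100 / 12),
  };
}

const grain = planGrain(3, { grainSize: 0.2, playbackRate: 2, overlap: 0.1, detune: 1200 });
console.log(grain.startTime.toFixed(2), grain.offset.toFixed(2), grain.rate); // 0.30 0.60 2
```

With playbackRate 2 the grains are launched twice as often, so the buffer is traversed in half the time, yet with detune 0 every grain would still play at its original pitch.
*/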

/**

* The playback rate of the sample

*/

get playbackRate() {

return this._playbackRate;

}

set playbackRate(rate) {

assertRange(rate, 0.001);

this._playbackRate = rate;

this.grainSize = this._grainSize;

}

/**

* The loop start time.

*/

get loopStart() {

return this._loopStart;

}

set loopStart(time) {

if (this.buffer.loaded) {

assertRange(this.toSeconds(time), 0, this.buffer.duration);

}

this._loopStart = this.toSeconds(time);

}

/**

* The loop end time.

*/

get loopEnd() {

return this._loopEnd;

}

set loopEnd(time) {

if (this.buffer.loaded) {

assertRange(this.toSeconds(time), 0, this.buffer.duration);

}

this._loopEnd = this.toSeconds(time);

}

/**

* The direction the buffer should play in

*/

get reverse() {

return this.buffer.reverse;

}

set reverse(rev) {

this.buffer.reverse = rev;

}

/**

* The size of each chunk of audio that the

* buffer is chopped into and played back at.

*/

get grainSize() {

return this._grainSize;

}

set grainSize(size) {

this._grainSize = this.toSeconds(size);

this._clock.frequency.setValueAtTime(this._playbackRate / this._grainSize, this.now());

}

/**

* The duration of the cross-fade between successive grains.

*/

get overlap() {

return this._overlap;

}

set overlap(time) {

const computedTime = this.toSeconds(time);

assertRange(computedTime, 0);

this._overlap = computedTime;

}

/**

* Whether the buffer is loaded

*/

get loaded() {

return this.buffer.loaded;

}

dispose() {

super.dispose();

this.buffer.dispose();

this._clock.dispose();

this._activeSources.forEach((source) => source.dispose());

return this;

}

}

/**

* Return the absolute value of an incoming signal.

*

* @example

* return Tone.Offline(() => {

* const abs = new Tone.Abs().toDestination();

* const signal = new Tone.Signal(1);

* signal.rampTo(-1, 0.5);

* signal.connect(abs);

* }, 0.5, 1);

* @category Signal

*/

class Abs extends SignalOperator {

constructor() {

super(...arguments);

this.name = "Abs";

/**

* The node which converts the audio ranges

*/

this._abs = new WaveShaper({

context: this.context,

mapping: val => {

if (Math.abs(val) < 0.001) {

return 0;

}

else {

return Math.abs(val);

}

},

});

/**

* The AudioRange input [-1, 1]

*/

this.input = this._abs;

/**

* The output range [0, 1]

*/

this.output = this._abs;

}

/**

* clean up

*/

dispose() {

super.dispose();

this._abs.dispose();

return this;

}

}
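/*
The mapping passed to the WaveShaper in Abs can be checked on its own. This is a sketch, assuming the curve is sampled at evenly spaced points from -1 to 1 inclusive; `sampleCurve` is a hypothetical stand-in for the WaveShaper's curve generation, not a Tone.js function.

```javascript
// Same logic as the Abs mapping: |x|, with values within 0.001 of zero flushed to 0.
function absMapping(val) {
  return Math.abs(val) < 0.001 ? 0 : Math.abs(val);
}

// Hypothetical helper: sample a mapping over [-1, 1] into a lookup curve.
function sampleCurve(mapping, length) {
  const curve = new Float32Array(length);
  for (let i = 0; i < length; i++) {
    curve[i] = mapping((i / (length - 1)) * 2 - 1);
  }
  return curve;
}

console.log(Array.from(sampleCurve(absMapping, 5))); // -> [1, 0.5, 0, 0.5, 1]
```

The small dead zone means residual values very close to zero come out as exactly 0 rather than as tiny positive numbers.
*/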

/**

* GainToAudio converts an input in NormalRange [0,1] to AudioRange [-1,1].

* See [[AudioToGain]].

* @category Signal

*/

class GainToAudio extends SignalOperator {

constructor() {

super(...arguments);

this.name = "GainToAudio";

/**

* The node which converts the audio ranges

*/

this._norm = new WaveShaper({

context: this.context,

mapping: x => Math.abs(x) * 2 - 1,

});

/**

* The NormalRange input [0, 1]

*/

this.input = this._norm;

/**

* The AudioRange output [-1, 1]

*/

this.output = this._norm;

}

/**

* clean up

*/

dispose() {

super.dispose();

this._norm.dispose();

return this;

}

}
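/*
A small numeric sketch of the GainToAudio mapping, plus an assumed inverse for the AudioToGain direction ((x + 1) / 2, labelled as an assumption here). Note that Math.abs in the mapping folds a negative input back into the positive range before rescaling.

```javascript
// The mapping GainToAudio installs: [0, 1] -> [-1, 1].
const gainToAudio = (x) => Math.abs(x) * 2 - 1;

// Assumed inverse, the direction AudioToGain covers: [-1, 1] -> [0, 1].
const audioToGain = (x) => (x + 1) / 2;

console.log(gainToAudio(0), gainToAudio(0.5), gainToAudio(1)); // -1 0 1
console.log(audioToGain(gainToAudio(0.25))); // 0.25
```

Because of the Math.abs, an out-of-range input such as -0.5 maps to 0, the same as +0.5.
*/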

/**

* Negate the incoming signal; e.g. an input signal of 10 outputs -10.

*

* @example

* const neg = new Tone.Negate();

* const sig = new Tone.Signal(-2).connect(neg);

* // output of neg is positive 2.

* @category Signal

*/

class Negate extends SignalOperator {

constructor() {

super(...arguments);

this.name = "Negate";

/**

* negation is done by multiplying by -1

*/

this._multiply = new Multiply({

context: this.context,

value: -1,

});

/**

* The input and output are equal to the multiply node

*/

this.input = this._multiply;

this.output = this._multiply;

}

/**

* clean up

* @returns {Negate} this

*/

dispose() {

super.dispose();

this._multiply.dispose();

return this;

}

}

/**

* Subtract the signal connected to [[subtrahend]] from the signal connected

* to the input.

*

* @example

* // subtract a scalar from a signal

* const sub = new Tone.Subtract(1);

* const sig = new Tone.Signal(4).connect(sub);

* // the output of sub is 3.

* @example

* // subtract two signals

* const sub = new Tone.Subtract();

* const sigA = new Tone.Signal(10);

* const sigB = new Tone.Signal(2.5);

* sigA.connect(sub);

* sigB.connect(sub.subtrahend);

* // output of sub is 7.5

* @category Signal

*/

class Subtract extends Signal {

constructor() {

super(Object.assign(optionsFromArguments(Subtract.getDefaults(), arguments, ["value"])));

this.override = false;

this.name = "Subtract";

/**

* the summing node

*/

this._sum = new Gain({ context: this.context });

this.input = this._sum;

this.output = this._sum;

/**

* Negate the input of the second input before connecting it to the summing node.

*/

this._neg = new Negate({ context: this.context });

/**

* The value which is subtracted from the main signal

*/

this.subtrahend = this._param;

connectSeries(this._constantSource, this._neg, this._sum);

}

static getDefaults() {

return Object.assign(Signal.getDefaults(), {

value: 0,

});

}

dispose() {

super.dispose();

this._neg.dispose();

this._sum.dispose();

return this;

}

}
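/*
At the level of plain numbers, the Subtract graph reduces to one sum. In this sketch `negate` and `subtract` are illustrative names: the subtrahend passes through Negate (a Multiply by -1) and is summed with the input in a single Gain node.

```javascript
// Number-level model of the Subtract graph.
const negate = (x) => x * -1;
const subtract = (input, subtrahend) => input + negate(subtrahend);

console.log(subtract(4, 1)); // 3
console.log(subtract(10, 2.5)); // 7.5
```

Because Web Audio sums every connection arriving at the same input, connecting two signals to the Subtract input subtracts the subtrahend from their sum.
*/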

/**

* GreaterThanZero outputs 1 when the input is strictly greater than zero, and 0 otherwise.

* @example

* return Tone.Offline(() => {

* const gt0 = new Tone.GreaterThanZero().toDestination();

* const sig = new Tone.Signal(0.5).connect(gt0);

* sig.setValueAtTime(-1, 0.05);

* }, 0.1, 1);

* @category Signal

*/

class GreaterThanZero extends SignalOperator {

constructor() {

super(Object.assign(optionsFromArguments(GreaterThanZero.getDefaults(), arguments)));

this.name = "GreaterThanZero";

this._thresh = this.output = new WaveShaper({

context: this.context,

length: 127,

mapping: (val) => {

if (val <= 0) {

return 0;

}

else {

return 1;

}

},

});

this._scale = this.input = new Multiply({

context: this.context,

value: 10000

});

// connections

this._scale.connect(this._thresh);

}

dispose() {

super.dispose();

this._scale.dispose();

this._thresh.dispose();

return this;

}

}
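/*
To see why the input is multiplied by 10000 first, this sketch evaluates the 127-point step curve using the linear interpolation that the Web Audio API specification describes for WaveShaperNode (assuming oversample is "none"). It also assumes the curve is sampled evenly over [-1, 1]. `applyCurve` and `stepCurve` are illustrative names. Scaling pushes almost every positive input past the curve's middle point.

```javascript
// Curve lookup modelled on the WaveShaperNode description:
// inputs outside [-1, 1] clamp to the end points, others interpolate linearly.
function applyCurve(curve, x) {
  const n = curve.length;
  const v = ((n - 1) / 2) * (x + 1);
  if (v <= 0) return curve[0];
  if (v >= n - 1) return curve[n - 1];
  const k = Math.floor(v);
  const f = v - k;
  return (1 - f) * curve[k] + f * curve[k + 1];
}

// GreaterThanZero: multiply by 10000, then look up a 127-point step curve.
const stepCurve = Array.from({ length: 127 }, (_, i) => ((i / 126) * 2 - 1 <= 0 ? 0 : 1));
const greaterThanZero = (x) => applyCurve(stepCurve, x * 10000);

console.log(greaterThanZero(0.5), greaterThanZero(0), greaterThanZero(-1)); // 1 0 0
```

Under this model, only positive inputs below roughly 1.6e-6 (one curve step, 1/63, divided by 10000) land between the two middle points and give a fractional output.
*/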

/**

* Outputs 1 if the signal is greater than the value, otherwise outputs 0.

* It can compare two signals or a signal and a number.

*

* @example

* return Tone.Offline(() => {

* const gt = new Tone.GreaterThan(2).toDestination();

* const sig = new Tone.Signal(4).connect(gt);

* }, 0.1, 1);

* @category Signal

*/

class GreaterThan extends Signal {

constructor() {

super(Object.assign(optionsFromArguments(GreaterThan.getDefaults(), arguments, ["value"])));

this.name = "GreaterThan";

this.override = false;

const options = optionsFromArguments(GreaterThan.getDefaults(), arguments, ["value"]);

this._subtract = this.input = new Subtract({

context: this.context,

value: options.value

});

this._gtz = this.output = new GreaterThanZero({ context: this.context });

this.comparator = this._param = this._subtract.subtrahend;

readOnly(this, "comparator");

// connect

this._subtract.connect(this._gtz);

}

static getDefaults() {

return Object.assign(Signal.getDefaults(), {

value: 0,

});

}

dispose() {

super.dispose();

this._gtz.dispose();

this._subtract.dispose();

this.comparator.dispose();

return this;

}

}

/**

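* Pow applies an exponent to the incoming signal. The incoming signal must be AudioRange [-1, 1].

* The exponent is applied to the absolute value of the input, so negative inputs produce non-negative outputs.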

*

* @example

* const pow = new Tone.Pow(2);

* const sig = new Tone.Signal(0.5).connect(pow);

* // output of pow is 0.25.

* @category Signal

*/

class Pow extends SignalOperator {

constructor() {

super(Object.assign(optionsFromArguments(Pow.getDefaults(), arguments, ["value"])));

this.name = "Pow";

const options = optionsFromArguments(Pow.getDefaults(), arguments, ["value"]);

this._exponentScaler = this.input = this.output = new WaveShaper({

context: this.context,

mapping: this._expFunc(options.value),

length: 8192,

});

this._exponent = options.value;

}

static getDefaults() {

return Object.assign(SignalOperator.getDefaults(), {

value: 1,

});

}

/**

* the function which maps the waveshaper

* @param exponent exponent value

*/

_expFunc(exponent) {

return (val) => {

return Math.pow(Math.abs(val), exponent);

};

}

/**

* The value of the exponent.

*/

get value() {

return this._exponent;

}

set value(exponent) {

this._exponent = exponent;

this._exponentScaler.setMap(this._expFunc(this._exponent));

}

/**

* Clean up.

*/

dispose() {

super.dispose();

this._exponentScaler.dispose();

return this;

}

}
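/*
A quick check of the mapping _expFunc hands to the WaveShaper, sketched as plain functions. Because the exponent is applied to Math.abs(val), the sign of a negative input is lost. `powMapping` is an illustrative name.

```javascript
// Same shape as Pow._expFunc: returns a mapping that raises |val| to `exponent`.
const powMapping = (exponent) => (val) => Math.pow(Math.abs(val), exponent);

const square = powMapping(2);
const cube = powMapping(3);
console.log(square(0.5)); // 0.25
console.log(cube(-0.5)); // 0.125, not -0.125
```

Setting `value` later rebuilds the WaveShaper curve from a new mapping via setMap.
*/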

/**

* Performs an exponential scaling on an input signal.

* Scales a NormalRange value [0,1] exponentially

* to the output range of outputMin to outputMax.

* @example

* const scaleExp = new Tone.ScaleExp(0, 100, 2);

* const signal = new Tone.Signal(0.5).connect(scaleExp);

* @category Signal

*/

class ScaleExp extends Scale {

constructor() {

super(Object.assign(optionsFromArguments(ScaleExp.getDefaults(), arguments, ["min", "max", "exponent"])));

this.name = "ScaleExp";

const options = optionsFromArguments(ScaleExp.getDefaults(), arguments, ["min", "max", "exponent"]);

this.input = this._exp = new Pow({

context: this.context,

value: options.exponent,

});

this._exp.connect(this._mult);

}

static getDefaults() {

return Object.assign(Scale.getDefaults(), {

exponent: 1,

});

}

/**

* Instead of interpolating linearly between the [[min]] and

* [[max]] values, setting the exponent will interpolate between

* the two values with an exponential curve.

*/

get exponent() {

return this._exp.value;

}

set exponent(exp) {

this._exp.value = exp;

}

dispose() {

super.dispose();

this._exp.dispose();

return this;

}

}
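/*
A minimal sketch of the signal path ScaleExp builds, assuming that Scale (defined elsewhere in this bundle) maps [0, 1] linearly onto [min, max] by multiplying by (max - min) and then adding min. `scaleExp` is a hypothetical helper.

```javascript
// Hypothetical model of ScaleExp: Pow shapes the input, then Scale maps it onto [min, max].
function scaleExp(x, min, max, exponent) {
  const shaped = Math.pow(Math.abs(x), exponent); // Pow stage
  return min + (max - min) * shaped; // Scale stage (assumed linear)
}

console.log(scaleExp(0.5, 0, 100, 2)); // 25
console.log(scaleExp(0.5, 0, 100, 1)); // 50
```

This matches the class example above: `new Tone.ScaleExp(0, 100, 2)` fed 0.5 should settle near 25 rather than the linear midpoint of 50.
*/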

/**

* Adds the ability to synchronize the signal to the [[Transport]]

*/

class SyncedSignal extends Signal {

constructor() {

super(optionsFromArguments(Signal.getDefaults(), arguments, ["value", "units"]));

this.name = "SyncedSignal";

/**

* Don't override when something is connected to the input

*/

this.override = false;

const options = optionsFromArguments(Signal.getDefaults(), arguments, ["value", "units"]);

this._lastVal = options.value;

this._synced = this.context.transport.scheduleRepeat(this._onTick.bind(this), "1i");

this._syncedCallback = this._anchorValue.bind(this);

this.context.transport.on("start", this._syncedCallback);

this.context.transport.on("pause", this._syncedCallback);

this.context.transport.on("stop", this._syncedCallback);

// disconnect the constant source from the output and replace it with another one

this._constantSource.disconnect();

this._constantSource.stop(0);

// create a new one

this._constantSource = this.output = new ToneConstantSource({

context: this.context,

offset: options.value,

units: options.units,

}).start(0);

this.setValueAtTime(options.value, 0);

}

/**

* Callback which is invoked every tick.

*/

_onTick(time) {

const val = super.getValueAtTime(this.context.transport.seconds);

// approximate ramp curves with a step at each tick where the value changes

if (this._lastVal !== val) {

this._lastVal = val;

this._constantSource.offset.setValueAtTime(val, time);

}

}
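/*
The tick handler can be modelled without a Transport. In this hypothetical sketch, `valueAt` stands in for reading the scheduled automation at the Transport position and `tickSeconds` for the length of one "1i" tick (about 2.6 ms, assuming the default 120 BPM and 192 PPQ). It shows that a ramp is reproduced as a staircase of setValueAtTime steps, with no event emitted while the value is unchanged.

```javascript
// Hypothetical model of SyncedSignal._onTick over a run of ticks.
function stepEvents(valueAt, tickSeconds, tickCount, initialValue) {
  const events = [];
  let lastVal = initialValue;
  for (let i = 0; i < tickCount; i++) {
    const time = i * tickSeconds;
    const val = valueAt(time);
    // only re-apply the value when it changed since the previous tick
    if (val !== lastVal) {
      lastVal = val;
      events.push({ time, val });
    }
  }
  return events;
}

// A 0 -> 1 linear ramp over one second, sampled at four coarse ticks.
const ramp = (t) => Math.min(t, 1);
console.log(stepEvents(ramp, 0.25, 4, 0).map((e) => e.val)); // -> [0.25, 0.5, 0.75]
```
*/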

/**

* Anchor the value at the start and stop of the Transport

*/

_anchorValue(time) {

const val = super.getValueAtTime(this.context.transport.seconds);

this._lastVal = val;

this._constantSource.offset.cancelAndHoldAtTime(time);

this._constantSource.offset.setValueAtTime(val, time);

}

getValueAtTime(time) {

const computedTime = new TransportTimeClass(this.context, time).toSeconds();

return super.getValueAtTime(computedTime);

}

setValueAtTime(value, time) {

const computedTime = new TransportTimeClass(this.context, time).toSeconds();

super.setValueAtTime(value, computedTime);

return this;

}

linearRampToValueAtTime(value, time) {

const computedTime = new TransportTimeClass(this.context, time).toSeconds();

super.linearRampToValueAtTime(value, computedTime);

return this;

}

exponentialRampToValueAtTime(value, time) {

const computedTime = new TransportTimeClass(this.context, time).toSeconds();

super.exponentialRampToValueAtTime(value, computedTime);

return this;

}

setTargetAtTime(value, startTime, timeConstant) {

const computedTime = new TransportTimeClass(this.context, startTime).toSeconds();

super.setTargetAtTime(value, computedTime, timeConstant);

return this;

}

cancelScheduledValues(startTime) {

const computedTime = new TransportTimeClass(this.context, startTime).toSeconds();

super.cancelScheduledValues(computedTime);

return this;

}

setValueCurveAtTime(values, startTime, duration, scaling) {

const computedTime = new TransportTimeClass(this.context, startTime).toSeconds();

duration = this.toSeconds(duration);

super.setValueCurveAtTime(values, computedTime, duration, scaling);

return this;

}

cancelAndHoldAtTime(time) {

const computedTime = new TransportTimeClass(this.context, time).toSeconds();

super.cancelAndHoldAtTime(computedTime);

return this;

}

setRampPoint(time) {

const computedTime = new TransportTimeClass(this.context, time).toSeconds();

super.setRampPoint(computedTime);

return this;

}

exponentialRampTo(value, rampTime, startTime) {

const computedTime = new TransportTimeClass(this.context, startTime).toSeconds();

super.exponentialRampTo(value, rampTime, computedTime);

return this;

}

linearRampTo(value, rampTime, startTime) {

const computedTime = new TransportTimeClass(this.context, startTime).toSeconds();

super.linearRampTo(value, rampTime, computedTime);

return this;

}

targetRampTo(value, rampTime, startTime) {

const computedTime = new TransportTimeClass(this.context, startTime).toSeconds();

super.targetRampTo(value, rampTime, computedTime);

return this;

}

dispose() {

super.dispose();

this.context.transport.clear(this._synced);

this.context.transport.off("start", this._syncedCallback);

this.context.transport.off("pause", this._syncedCallback);

this.context.transport.off("stop", this._syncedCallback);

this._constantSource.dispose();

return this;

}

}

/**

* Base class for both AM and FM synths

*/

class ModulationSynth extends Monophonic {

constructor() {

super(optionsFromArguments(ModulationSynth.getDefaults(), arguments));

this.name = "ModulationSynth";

const options = optionsFromArguments(ModulationSynth.getDefaults(), arguments);

this._carrier = new Synth({

context: this.context,

oscillator: options.oscillator,

envelope: options.envelope,

onsilence: () => this.onsilence(this),

volume: -10,

});

this._modulator = new Synth({

context: this.context,

oscillator: options.modulation,

envelope: options.modulationEnvelope,

volume: -10,

});

this.oscillator = this._carrier.oscillator;

this.envelope = this._carrier.envelope;

this.modulation = this._modulator.oscillator;

this.modulationEnvelope = this._modulator.envelope;

this.frequency = new Signal({

context: this.context,

units: "frequency",

});

this.detune = new Signal({

context: this.context,

value: options.detune,

units: "cents"

});

this.harmonicity = new Multiply({

context: this.context,

value: options.harmonicity,

minValue: 0,

});

this._modulationNode = new Gain({

context: this.context,

gain: 0,

});

readOnly(this, ["frequency", "harmonicity", "oscillator", "envelope", "modulation", "modulationEnvelope", "detune"]);

}

static getDefaults() {

return Object.assign(Monophonic.getDefaults(), {

harmonicity: 3,

oscillator: Object.assign(omitFromObject(OmniOscillator.getDefaults(), [

...Object.keys(Source.getDefaults()),

"frequency",

"detune"

]), {

type: "sine"

}),

envelope: Object.assign(omitFromObject(Envelope.getDefaults(), Object.keys(ToneAudioNode.getDefaults())), {

attack: 0.01,

decay: 0.01,

sustain: 1,

release: 0.5

}),

modulation: Object.assign(omitFromObject(OmniOscillator.getDefaults(), [

...Object.keys(Source.getDefaults()),

"frequency",

"detune"

]), {

type: "square"

}),

modulationEnvelope: Object.assign(omitFromObject(Envelope.getDefaults(), Object.keys(ToneAudioNode.getDefaults())), {

attack: 0.5,

decay: 0.0,

sustain: 1,

release: 0.5

})

});

}

/**

* Trigger the attack portion of the note

*/

_triggerEnvelopeAttack(time, velocity) {

// @ts-ignore

this._carrier._triggerEnvelopeAttack(time, velocity);

// @ts-ignore

this._modulator._triggerEnvelopeAttack(time, velocity);

}

/**

* Trigger the release portion of the note

*/

_triggerEnvelopeRelease(time) {

// @ts-ignore

this._carrier._triggerEnvelopeRelease(time);

// @ts-ignore

this._modulator._triggerEnvelopeRelease(time);

return this;

}

getLevelAtTime(time) {

time = this.toSeconds(time);

return this.envelope.getValueAtTime(time);

}

dispose() {

super.dispose();

this._carrier.dispose();

this._modulator.dispose();

this.frequency.dispose();

this.detune.dispose();

this.harmonicity.dispose();

this._modulationNode.dispose();

return this;

}

}

/**

* AMSynth uses the output of one Tone.Synth to modulate the

* amplitude of another Tone.Synth. The harmonicity (the frequency ratio between

* the two signals) strongly affects the timbre of the output signal.

* Read more about Amplitude Modulation Synthesis on

* [SoundOnSound](https://web.archive.org/web/20160404103653/http://www.soundonsound.com:80/sos/mar00/articles/synthsecrets.htm).

*

* @example

* const synth = new Tone.AMSynth().toDestination();

* synth.triggerAttackRelease("C4", "4n");

*

* @category Instrument

*/

class AMSynth extends ModulationSynth {

constructor() {

super(optionsFromArguments(AMSynth.getDefaults(), arguments));

this.name = "AMSynth";

this._modulationScale = new AudioToGain({

context: this.context,

});

// control the two voices' frequencies

this.frequency.connect(this._carrier.frequency);

this.frequency.chain(this.harmonicity, this._modulator.frequency);

this.detune.fan(this._carrier.detune, this._modulator.detune);

this._modulator.chain(this._modulationScale, this._modulationNode.gain);

this._carrier.chain(this._modulationNode, this.output);

}

dispose() {

super.dispose();

this._modulationScale.dispose();

return this;

}

}
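/*
An idealized, sample-by-sample sketch of the AM routing wired up in the AMSynth constructor. It assumes sine waves for both voices (the defaults are actually a sine carrier and a square modulator) and ignores the envelopes and the -10 dB voice volumes. It also assumes AudioToGain maps [-1, 1] to [0, 1] as (x + 1) / 2. `amSample` and `modulatorFrequency` are hypothetical helpers.

```javascript
// The modulator runs at frequency * harmonicity.
const modulatorFrequency = (frequency, harmonicity) => frequency * harmonicity;

// One output sample at time t (seconds) of an idealized AM voice.
function amSample(t, frequency, harmonicity) {
  const carrier = Math.sin(2 * Math.PI * frequency * t);
  const modulator = Math.sin(2 * Math.PI * modulatorFrequency(frequency, harmonicity) * t);
  // rescale the modulator to [0, 1] and use it as the carrier's gain
  const gain = (modulator + 1) / 2;
  return carrier * gain;
}

console.log(modulatorFrequency(440, 3)); // 1320
```

With the default harmonicity of 3, an A4 carrier is modulated at 1320 Hz, which places the sidebands at 440 +/- 1320 Hz and gives the tone its character.
*/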

/**

* Thin wrapper around the native Web Audio [BiquadFilterNode](https://webaudio.github.io/web-audio-api/#biquadfilternode).

* BiquadFilter is similar to [[Filter]] but doesn't have the option to set the "rolloff" value.

* @category Component

*/

class BiquadFilter extends ToneAudioNode {

constructor() {

super(optionsFromArguments(BiquadFilter.getDefaults(), arguments, ["frequency", "type"]));

this.name = "BiquadFilter";

const options = optionsFromArguments(BiquadFilter.getDefaults(), arguments, ["frequency", "type"]);

this._filter = this.context.createBiquadFilter();

this.input = this.output = this._filter;

this.Q = new Param({

context: this.context,

units: "number",

value: options.Q,

param: this._filter.Q,

});

this.frequency = new Param({

context: this.context,

units: "frequency",

value: options.frequency,

param: this._filter.frequency,

});

this.detune = new Param({

context: this.context,

units: "cents",

value: options.detune,

param: this._filter.detune,

});

this.gain = new Param({

context: this.context,

units: "decibels",

convert: false,

value: options.gain,

param: this._filter.gain,

});

this.type = options.type;

}

static getDefaults() {

return Object.assign(ToneAudioNode.getDefaults(), {

Q: 1,

type: "lowpass",

frequency: 350,

detune: 0,

gain: 0,

});

}

/**

* The type of this BiquadFilterNode. For a complete list of types and their attributes, see the

* [Web Audio API](https://webaudio.github.io/web-audio-api/#dom-biquadfiltertype-lowpass)

*/

get type() {

return this._filter.type;

}

set type(type) {

const types = ["lowpass", "highpass", "bandpass",

"lowshelf", "highshelf", "notch", "allpass", "peaking"];

assert(types.indexOf(type) !== -1, `Invalid filter type: ${type}`);

this._filter.type = type;

}

/**

* Get the frequency response curve. This curve represents how the filter

* responds to frequencies between 20Hz and 20kHz.

* @param len The number of values to return

* @return The frequency response curve between 20-20kHz

*/

getFrequencyResponse(len = 128) {

// build the probe frequencies, spaced quadratically upward from 20Hz

const freqValues = new Float32Array(len);

for (let i = 0; i < len; i++) {

const norm = Math.pow(i / len, 2);

const freq = norm * (20000 - 20) + 20;

freqValues[i] = freq;

}

const magValues = new Float32Array(len);

const phaseValues = new Float32Array(len);

// clone the filter to remove any connections which may be changing the value

const filterClone = this.context.createBiquadFilter();

filterClone.type = this.type;

filterClone.Q.value = this.Q.value;

filterClone.frequency.value = this.frequency.value;

filterClone.gain.value = this.gain.value;

filterClone.detune.value = this.detune.value;

filterClone.getFrequencyResponse(freqValues, magValues, phaseValues);

return magValues;

}
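/*
The probe frequencies used by getFrequencyResponse can be reproduced on their own. This sketch (`responseFrequencies` is an illustrative name) shows the quadratic spacing, which puts more points at low frequencies. Because i / len never reaches 1, the last point falls short of 20 kHz: for the default len of 128 it is just under 19.7 kHz.

```javascript
// Same formula as the loop in BiquadFilter.getFrequencyResponse.
function responseFrequencies(len = 128) {
  const freqs = new Float32Array(len);
  for (let i = 0; i < len; i++) {
    freqs[i] = Math.pow(i / len, 2) * (20000 - 20) + 20;
  }
  return freqs;
}

console.log(Array.from(responseFrequencies(4))); // -> [20, 1268.75, 5015, 11258.75]
```
*/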

dispose() {

super.dispose();

this._filter.disconnect();

this.Q.dispose();

this.frequency.dispose();

this.gain.dispose();

this.detune.dispose();

return this;

}

}

/**

* Tone.Filter is a filter which allows for all of the same native methods

* as the [BiquadFilterNode](http://webaudio.github.io/web-audio-api/#the-biquadfilternode-interface).

* Tone.Filter has the added ability to set the filter rolloff at -12

* (default), -24, -48 and -96.

* @example

* const filter = new Tone.Filter(1500, "highpass").toDestination();

* filter.frequency.rampTo(20000, 10);

* const noise = new Tone.Noise().connect(filter).start();

* @category Component

*/

class Filter extends ToneAudioNode {

constructor() {

super(optionsFromArguments(Filter.getDefaults(), arguments, ["frequency", "type", "rolloff"]));

this.name = "Filter";

this.input = new Gain({ context: this.context });

this.output = new Gain({ context: this.context });

this._filters = [];

const options = optionsFromArguments(Filter.getDefaults(), arguments, ["frequency", "type", "rolloff"]);

this._filters = [];

this.Q = new Signal({

context: this.context,

units: "positive",

value: options.Q,

});

this.frequency = new Signal({

context: this.context,

units: "frequency",

value: options.frequency,

});

this.detune = new Signal({

context: this.context,

units: "cents",

value: options.detune,

});

this.gain = new Signal({

context: this.context,

units: "decibels",

convert: false,

value: options.gain,

});

this._type = options.type;

this.rolloff = options.rolloff;

readOnly(this, ["detune", "frequency", "gain", "Q"]);

}

static getDefaults() {

return Object.assign(ToneAudioNode.getDefaults(), {

Q: 1,

detune: 0,

frequency: 350,

gain: 0,

rolloff: -12,

type: "lowpass",

});

}

/**

* The type of the filter. Types: "lowpass", "highpass",

* "bandpass", "lowshelf", "highshelf", "notch", "allpass", or "peaking".

*/

get type() {

return this._type;

}

set type(type) {

const types = ["lowpass", "highpass", "bandpass",

"lowshelf", "highshelf", "notch", "allpass", "peaking"];

assert(types.indexOf(type) !== -1, `Invalid filter type: ${type}`);

this._type = type;

this._filters.forEach(filter => filter.type = type);

}

/**

* The rolloff of the filter, which is the drop in dB

* per octave. Implemented internally by cascading filters.

* Only accepts the values -12, -24, -48 and -96.

*/

get rolloff() {

return this._rolloff;

}

set rolloff(rolloff) {

const rolloffNum = isNumber(rolloff) ? rolloff : parseInt(rolloff, 10);

const possibilities = [-12, -24, -48, -96];

let cascadingCount = possibilities.indexOf(rolloffNum);

// check the rolloff is valid

assert(cascadingCount !== -1, `rolloff can only be ${possibilities.join(", ")}`);

cascadingCount += 1;

this._rolloff = rolloffNum;

this.input.disconnect();

this._filters.forEach(filter => filter.disconnect());

this._filters = new Array(cascadingCount);

for (let count = 0; count < cascadingCount; count++) {

const filter = new BiquadFilter({

context: this.context,

});

filter.type = this._type;

this.frequency.connect(filter.frequency);

this.detune.connect(filter.detune);

this.Q.connect(filter.Q);

this.gain.connect(filter.gain);

this._filters[count] = filter;

}

this._internalChannels = this._filters;

connectSeries(this.input, ...this._internalChannels, this.output);

}
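/*
A sketch of the validation in the rolloff setter, pulled out as a hypothetical `cascadeCount` function so it can be run directly. The stage count is the index in the allowed list plus one, not the rolloff divided by -12, so -48 yields three cascaded BiquadFilters and -96 yields four.

```javascript
// Mirrors the rolloff setter: how many identical BiquadFilters get chained.
function cascadeCount(rolloff) {
  const rolloffNum = typeof rolloff === "number" ? rolloff : parseInt(rolloff, 10);
  const possibilities = [-12, -24, -48, -96];
  const index = possibilities.indexOf(rolloffNum);
  if (index === -1) {
    throw new Error(`rolloff can only be ${possibilities.join(", ")}`);
  }
  return index + 1;
}

console.log(cascadeCount(-12), cascadeCount("-24"), cascadeCount(-48), cascadeCount(-96)); // 1 2 3 4
```

Each lowpass or highpass biquad stage rolls off at roughly 12 dB per octave, so the real slope follows the stage count: the -48 setting gives about 36 dB per octave.
*/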

/**

* Get the frequency response curve. This curve represents how the filter

* responds to frequencies between 20Hz and 20kHz.

* @param len The number of values to return

* @return The frequency response curve between 20-20kHz

*/

getFrequencyResponse(len = 128) {

const filterClone = new BiquadFilter({

context: this.context,

frequency: this.frequency.value,

gain: this.gain.value,

Q: this.Q.value,

type: this._type,

detune: this.detune.value,

});

// start with all 1s

const totalResponse = new Float32Array(len).map(() => 1);

this._filters.forEach(() => {

const response = filterClone.getFrequencyResponse(len);

response.forEach((val, i) => totalResponse[i] *= val);

});

filterClone.dispose();

return totalResponse;

}

/**

* Clean up.

*/

dispose() {

super.dispose();

this._filters.forEach(filter => {

filter.dispose();

});

writable(this, ["detune", "frequency", "gain", "Q"]);

this.frequency.dispose();

this.Q.dispose();

this.detune.dispose();

this.gain.dispose();

return this;

}

}

/**

* FrequencyEnvelope is an [[Envelope]] which ramps between [[baseFrequency]]

* and [[octaves]] octaves above it. It can also have an optional [[exponent]] to adjust the curve

* of the ramp.

* @example

* const oscillator = new Tone.Oscillator().toDestination().start();

* const freqEnv = new Tone.FrequencyEnvelope({

* attack: 0.2,

* baseFrequency: "C2",

* octaves: 4

* });

* freqEnv.connect(oscillator.frequency);

* freqEnv.triggerAttack();

* @category Component

*/

class FrequencyEnvelope extends Envelope {

constructor() {

super(optionsFromArguments(FrequencyEnvelope.getDefaults(), arguments, ["attack", "decay", "sustain", "release"]));

this.name = "FrequencyEnvelope";

const options = optionsFromArguments(FrequencyEnvelope.getDefaults(), arguments, ["attack", "decay", "sustain", "release"]);

this._octaves = options.octaves;

this._baseFrequency = this.toFrequency(options.baseFrequency);

this._exponent = this.input = new Pow({

context: this.context,

value: options.exponent

});

this._scale = this.output = new Scale({

context: this.context,

min: this._baseFrequency,

max: this._baseFrequency * Math.pow(2, this._octaves),

});

this._sig.chain(this._exponent, this._scale);

}

static getDefaults() {

return Object.assign(Envelope.getDefaults(), {

baseFrequency: 200,

exponent: 1,

octaves: 4,

});

}

/**

* The envelope's minimum output value. This is the value which it

* starts at.

*/

get baseFrequency() {

return this._baseFrequency;

}

set baseFrequency(min) {

const freq = this.toFrequency(min);

assertRange(freq, 0);

this._baseFrequency = freq;

this._scale.min = this._baseFrequency;

// update the max value when the min changes

this.octaves = this._octaves;

}

/**

* The number of octaves above the baseFrequency that the

* envelope will scale to.

*/

get octaves() {

return this._octaves;

}

set octaves(octaves) {

this._octaves = octaves;

this._scale.max = this._baseFrequency * Math.pow(2, octaves);

}

/**

* The envelope's exponent value.

*/

get exponent() {

return this._exponent.value;

}

set exponent(exponent) {

this._exponent.value = exponent;

}

/**

* Clean up

*/

dispose() {

super.dispose();

this._exponent.dispose();

this._scale.dispose();

return this;

}

}
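/*
A minimal sketch of how an envelope value in [0, 1] becomes a frequency in this class, assuming Scale (defined elsewhere in this bundle) maps [0, 1] linearly onto [min, max]. `envelopeToFrequency` is a hypothetical helper; times and note names are left out.

```javascript
// Envelope value -> Pow (exponent) -> Scale(baseFrequency, baseFrequency * 2^octaves).
function envelopeToFrequency(envValue, { baseFrequency, octaves, exponent }) {
  const max = baseFrequency * Math.pow(2, octaves);
  const shaped = Math.pow(Math.abs(envValue), exponent); // Pow stage
  return baseFrequency + (max - baseFrequency) * shaped; // Scale stage (assumed linear)
}

const defaults = { baseFrequency: 200, octaves: 4, exponent: 1 };
console.log(envelopeToFrequency(0, defaults), envelopeToFrequency(1, defaults)); // 200 3200
console.log(envelopeToFrequency(0.5, { ...defaults, exponent: 2 })); // 950
```

With the default 200 Hz base and 4 octaves, the sweep tops out at 3200 Hz; raising the exponent keeps the curve lower for longer before reaching the top.
*/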

/**

* MonoSynth is composed of one `oscillator`, one `filter`, and two `envelopes`.

* The amplitude of the Oscillator and the cutoff frequency of the

* Filter are controlled by Envelopes.

* @example

* const synth = new Tone.MonoSynth({

* oscillator: {

* type: "square"

* },

* envelope: {

* attack: 0.1

* }

* }).toDestination();

* synth.triggerAttackRelease("C4", "8n");

* @category Instrument

*/

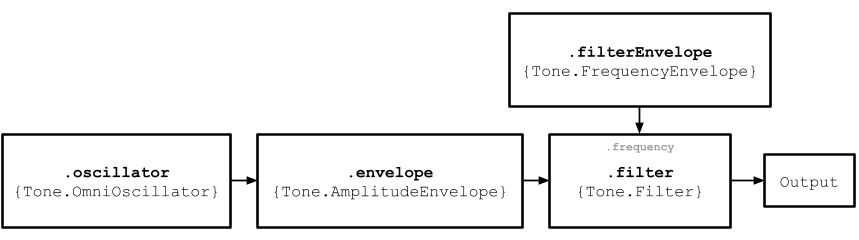

class MonoSynth extends Monophonic {

constructor() {

super(optionsFromArguments(MonoSynth.getDefaults(), arguments));

this.name = "MonoSynth";

const options = optionsFromArguments(MonoSynth.getDefaults(), arguments);

this.oscillator = new OmniOscillator(Object.assign(options.oscillator, {

context: this.context,

detune: options.detune,

onstop: () => this.onsilence(this),

}));

this.frequency = this.oscillator.frequency;

this.detune = this.oscillator.detune;

this.filter = new Filter(Object.assign(options.filter, { context: this.context }));

this.filterEnvelope = new FrequencyEnvelope(Object.assign(options.filterEnvelope, { context: this.context }));

this.envelope = new AmplitudeEnvelope(Object.assign(options.envelope, { context: this.context }));

// connect the oscillators to the output

this.oscillator.chain(this.filter, this.envelope, this.output);

// connect the filter envelope

this.filterEnvelope.connect(this.filter.frequency);

readOnly(this, ["oscillator", "frequency", "detune", "filter", "filterEnvelope", "envelope"]);

}

static getDefaults() {

return Object.assign(Monophonic.getDefaults(), {

envelope: Object.assign(omitFromObject(Envelope.getDefaults(), Object.keys(ToneAudioNode.getDefaults())), {

attack: 0.005,

decay: 0.1,

release: 1,

sustain: 0.9,

}),

filter: Object.assign(omitFromObject(Filter.getDefaults(), Object.keys(ToneAudioNode.getDefaults())), {

Q: 1,

rolloff: -12,

type: "lowpass",

}),

filterEnvelope: Object.assign(omitFromObject(FrequencyEnvelope.getDefaults(), Object.keys(ToneAudioNode.getDefaults())), {

attack: 0.6,

baseFrequency: 200,

decay: 0.2,

exponent: 2,

octaves: 3,

release: 2,

sustain: 0.5,

}),

oscillator: Object.assign(omitFromObject(OmniOscillator.getDefaults(), Object.keys(Source.getDefaults())), {

type: "sawtooth",

}),

});

}

/**

* start the attack portion of the envelope

* @param time the time the attack should start

* @param velocity the velocity of the note (0-1)

*/

_triggerEnvelopeAttack(time, velocity = 1) {

this.envelope.triggerAttack(time, velocity);

this.filterEnvelope.triggerAttack(time);

this.oscillator.start(time);

if (this.envelope.sustain === 0) {

const computedAttack = this.toSeconds(this.envelope.attack);

const computedDecay = this.toSeconds(this.envelope.decay);

this.oscillator.stop(time + computedAttack + computedDecay);

}

}

/**

* Start the release portion of both envelopes and schedule the oscillator to stop once the amplitude release has finished.

* @param time the time the release should start

*/

_triggerEnvelopeRelease(time) {

this.envelope.triggerRelease(time);

this.filterEnvelope.triggerRelease(time);

this.oscillator.stop(time + this.toSeconds(this.envelope.release));

}
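/*
Sketch (not part of Tone.js): the oscillator stop times that _triggerEnvelopeAttack and
_triggerEnvelopeRelease schedule, written as plain functions. `stopTimeAfterAttack` and
`stopTimeAfterRelease` are hypothetical names. All values are ASSUMED to already be numbers in
seconds, whereas the real methods first convert Tone.js time notation with this.toSeconds().

```javascript
// Hypothetical helpers mirroring the scheduling logic in the two methods above.
function stopTimeAfterAttack(time, { attack, decay, sustain }) {
  // With zero sustain the note is silent once the decay ends, so the oscillator
  // can stop then instead of waiting for a release.
  return sustain === 0 ? time + attack + decay : null; // null: keep running until release
}

function stopTimeAfterRelease(time, { release }) {
  // The oscillator keeps running until the release tail has finished.
  return time + release;
}

const defaults = { attack: 0.005, decay: 0.1, sustain: 0.9, release: 1 };
console.log(stopTimeAfterAttack(2, defaults));                    // null: sustain is 0.9
console.log(stopTimeAfterAttack(2, { ...defaults, sustain: 0 })); // about 2.105
console.log(stopTimeAfterRelease(3, defaults));                   // 4
```

Delaying the stop until the release has finished prevents the oscillator from being cut off
while the envelope tail is still audible.
*/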

getLevelAtTime(time) {

time = this.toSeconds(time);

return this.envelope.getValueAtTime(time);

}

dispose() {

super.dispose();

this.oscillator.dispose();

this.envelope.dispose();

this.filterEnvelope.dispose();

this.filter.dispose();

return this;

}

}

/**

* DuoSynth is a monophonic synth composed of two [[MonoSynth]]s running in parallel, with control over the

* frequency ratio between the two voices and a vibrato effect.

* @example

* const duoSynth = new Tone.DuoSynth().toDestination();

* duoSynth.triggerAttackRelease("C4", "2n");

* @category Instrument

*/

class DuoSynth extends Monophonic {

constructor() {

super(optionsFromArguments(DuoSynth.getDefaults(), arguments));

this.name = "DuoSynth";

const options = optionsFromArguments(DuoSynth.getDefaults(), arguments);

this.voice0 = new MonoSynth(Object.assign(options.voice0, {

context: this.context,

onsilence: () => this.onsilence(this)

}));

this.voice1 = new MonoSynth(Object.assign(options.voice1, {

context: this.context,

}));

this.harmonicity = new Multiply({

context: this.context,

units: "positive",

value: options.harmonicity,

});

this._vibrato = new LFO({

frequency: options.vibratoRate,

context: this.context,

min: -50,

max: 50

});

// start the vibrato immediately

this._vibrato.start();

this.vibratoRate = this._vibrato.frequency;

this._vibratoGain = new Gain({

context: this.context,

units: "normalRange",

gain: options.vibratoAmount

});

this.vibratoAmount = this._vibratoGain.gain;

this.frequency = new Signal({

context: this.context,

units: "frequency",

value: 440

});

this.detune = new Signal({

context: this.context,

units: "cents",

value: options.detune

});

// drive both voices' frequencies: voice0 follows frequency, voice1 follows frequency * harmonicity

this.frequency.connect(this.voice0.frequency);

this.frequency.chain(this.harmonicity, this.voice1.frequency);

this._vibrato.connect(this._vibratoGain);

this._vibratoGain.fan(this.voice0.detune, this.voice1.detune);

this.detune.fan(this.voice0.detune, this.voice1.detune);

this.voice0.connect(this.output);

this.voice1.connect(this.output);

readOnly(this, ["voice0", "voice1", "frequency", "vibratoAmount", "vibratoRate"]);

}
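/*
Sketch (not part of Tone.js): a numeric model of the pitch routing in the DuoSynth constructor.
voice0 follows `frequency`, and voice1 follows `frequency * harmonicity`. Both voices receive the
same detune: `detune` plus the vibrato LFO (min -50, max 50 cents) scaled by `vibratoAmount`.
`voiceFrequencies` is a hypothetical helper. It ASSUMES a sine LFO starting at zero phase
(sine is Tone.LFO's default type). Here t is measured from when the LFO started, which happens
in the constructor rather than per note.

```javascript
// Hypothetical model of the DuoSynth routing; not a Tone.js API.
function voiceFrequencies(t, { frequency = 440, harmonicity = 1.5, detune = 0,
                               vibratoRate = 5, vibratoAmount = 0.5 } = {}) {
  // LFO between -50 and +50 cents, then attenuated by the vibrato gain
  const vibratoCents = 50 * Math.sin(2 * Math.PI * vibratoRate * t) * vibratoAmount;
  const ratio = 2 ** ((detune + vibratoCents) / 1200); // cents to frequency ratio
  return [frequency * ratio, frequency * harmonicity * ratio];
}

// At t = 0 the LFO is at zero, so only harmonicity separates the voices (440 and 660 Hz).
console.log(voiceFrequencies(0));
// At the LFO peak, t = 1 / (4 * vibratoRate), both voices are about 25 cents sharp.
console.log(voiceFrequencies(0.05));
```

Because the vibrato and detune signals fan out to both voices, they shift both pitches together.
The interval set by `harmonicity` stays fixed while the pair wobbles.
*/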

getLevelAtTime(time) {

time = this.toSeconds(time);

return this.voice0.envelope.getValueAtTime(time) + this.voice1.envelope.getValueAtTime(time);

}
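/*
Sketch (not part of Tone.js): DuoSynth.getLevelAtTime adds the two voices' amplitude envelope
values, so the combined level can reach up to 2 rather than 1. `adsrLevel` is a hypothetical,
simplified LINEAR ADSR used only to illustrate the sum. Tone.js envelopes can use curved
(for example exponential) segments, so real values will differ. The envelope numbers below are
the MonoSynth defaults, used here as an assumption.

```javascript
// Hypothetical linear ADSR; t is seconds since triggerAttack, with no release triggered yet.
function adsrLevel(t, { attack, decay, sustain }, velocity = 1) {
  if (t <= 0) return 0;
  if (t < attack) return velocity * (t / attack);
  if (t < attack + decay) return velocity * (1 - (1 - sustain) * (t - attack) / decay);
  return velocity * sustain;
}

// Two identical voices, summed the way DuoSynth.getLevelAtTime sums them.
const env = { attack: 0.005, decay: 0.1, sustain: 0.9 };
const duoLevel = (t) => adsrLevel(t, env) + adsrLevel(t, env);
console.log(duoLevel(0.005)); // 2: both voices at full level at the end of the attack
console.log(duoLevel(1));     // 1.8: both voices holding a 0.9 sustain
```
*/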

static getDefaults() {

return deepMerge(Monophonic.getDefaults(), {

vibratoAmount: 0.5,

vibratoRate: 5,

harmonicity: 1.5,